I build software using semantic technologies. As such, ontologies are at the heart of what I do. For me, ontologies serve two very different roles, and these can often be in conflict. The two roles that I observe are as follows:

- Collaborative ontologies: Initially for domain modelling discussion, with the eventual aim of standardisation, either across or within organisations

- Embedded ontologies: Form an integral part of a working software system, modelling both the domain, and other application-specific data structures

Below I have described some further characteristics I have observed of these two roles:

Collaborative ontologies

- An attempt to model an entire domain

- Discussed by a wide community, who can present edge-cases and suggested changes

- Change slowly (or never) by necessity, allowing people to publish data without the model becoming out-of-date

- A tendency to model more, so that the requirements of a majority of potential users are met

- Designed in advance of use

- Aspires to be a truthful representation of the domain

- An academic approach, whereby ontologies are published by authors

- Equate to the ‘vision’ in agile software development terms

Embedded ontologies

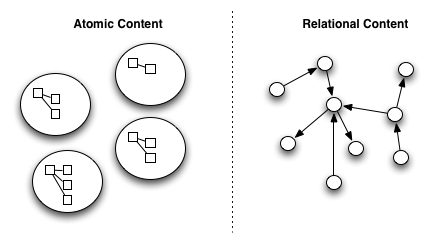

- An attempt to model parts of a domain used with a software system

- Discussed by the community using the system

- Change frequently, in response to the software design and the requirements of the system

- An MVO (minimal viable ontology), to match how the ontologies are used in the system

- Designed in response to requirements of the system

- Required to balance the demands of a software system (for example, performance considerations) against the desire to be a truthful representation of the domain

- An engineering approach, whereby ontologies are emergent

- Equate to the actual software in agile software development terms

In short, I would describe the most fundamental difference as follows:

- Collaborative ontologies: designed to change as little as possible

- Embedded ontologies: designed to be changed as easily as possible

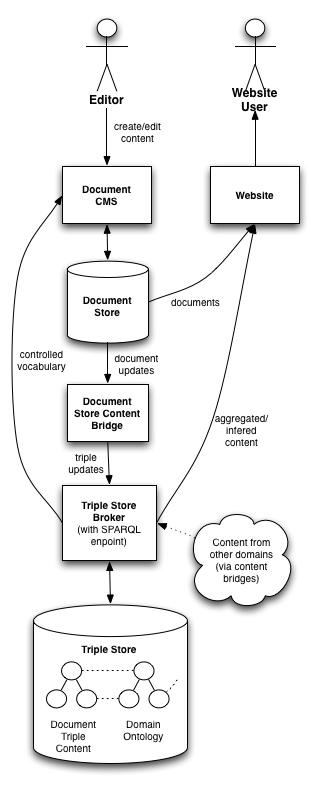

The idea of an embedded ontology might be less familiar, as they are certainly less common. For me, they are simply the data model of a software system, expressed using linked data. This is most obviously the case where linked data technologies, such as triple stores or RDF, are used to power the software.

I think both roles are important and necessary. However, the rest of this post is focused on demonstrating the need for embedded ontologies, because these are less widely used and understood.

Relationship between collaborative & embedded ontologies

Most commonly, no distinction is made between collaborative and embedded ontologies, and the conflict takes place over a single ontology. I believe a more productive approach would be to separate the embedded and collaborative ontologies, and to allow each to develop in response to its own pressures. The differences that emerge can inform a dialogue between abstract modelling and software delivery.

The use-case I will now present should demonstrate how this separate embedded ontology works in practice.

Embedded ontology use-case: A Sport app

To explain how embedded ontologies work, I will give an example: a software application in the sport domain.

First, to contrast, a collaborative sport ontology would be an attempt to model the domain of sport, comprising teams, sportspeople, disciplines, and perhaps sponsorship, venues, and fixtures.

Let us now assume I wanted to build a software system based around the domain of sport. My first question would be ‘what’s the MVP (minimal viable product)?’. I would hope to get an answer such as “A mobile app showing a list of teams in each English football division”. How would I go about building this? Well, good domain-driven design would lead me to use a good model and language to describe the parts of my software. I might have a /teams endpoint in an API. I might build a TeamList component in the JavaScript code. What I would not do is attempt to model sportspeople, disciplines, sponsorship, venues and fixtures. I don’t need these yet. What I need is a Minimal Viable Ontology.
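To make that concrete, here is a minimal sketch of what such a Minimal Viable Ontology might look like. It is an illustration under assumptions, not a real implementation: the `http://example.org/sport/` namespace, the `Team`, `Division` and `competesIn` terms, the team names and the `teams_in` helper are all invented for this example, and plain Python tuples stand in for whatever triple store or RDF library the system would really use.

```python
# Hypothetical namespace and terms, invented for this illustration.
SPORT = "http://example.org/sport/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_LABEL = "http://www.w3.org/2000/01/rdf-schema#label"

# The entire ontology for the MVP: two classes and one property.
TEAM = SPORT + "Team"
DIVISION = SPORT + "Division"
COMPETES_IN = SPORT + "competesIn"

D1 = SPORT + "division/1"
D2 = SPORT + "division/2"

# Instance data as (subject, predicate, object) triples.
triples = {
    (D1, RDF_TYPE, DIVISION),
    (D1, RDFS_LABEL, "Division One"),
    (D2, RDF_TYPE, DIVISION),
    (D2, RDFS_LABEL, "Division Two"),
    (SPORT + "team/a", RDF_TYPE, TEAM),
    (SPORT + "team/a", RDFS_LABEL, "Team A"),
    (SPORT + "team/a", COMPETES_IN, D1),
    (SPORT + "team/b", RDF_TYPE, TEAM),
    (SPORT + "team/b", RDFS_LABEL, "Team B"),
    (SPORT + "team/b", COMPETES_IN, D1),
    (SPORT + "team/c", RDF_TYPE, TEAM),
    (SPORT + "team/c", RDFS_LABEL, "Team C"),
    (SPORT + "team/c", COMPETES_IN, D2),
}


def teams_in(division, graph):
    """Return the sorted labels of every Team that competesIn the division."""
    members = {s for (s, p, o) in graph if p == COMPETES_IN and o == division}
    teams = {s for s in members if (s, RDF_TYPE, TEAM) in graph}
    return sorted(o for (s, p, o) in graph if p == RDFS_LABEL and s in teams)


# What a /teams endpoint might return for one division.
print(teams_in(D1, triples))
```

Nothing here models sportspeople, venues or fixtures. Those terms would only be added when a requirement asks for them.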

Designing the model up front used to be a problem in software as well, where SQL database schemas were designed in advance of writing any software. Then agile came along, together with Lean, TDD, BDD and so on. These approaches showed that upfront design usually resulted in the wrong architecture, badly modelled data structures, features nobody wants and unnecessary complexity.

So, whilst I believe that collaborative ontologies serve an important role in fostering collaboration in linked data, I also believe that we need strategies to make these approaches compatible with embedded ontologies, and therefore software engineering best practice.

Using ontologies in software

I want to now talk about some approaches to building embedded ontologies, used within software. As you will see, these contrast in a number of significant ways with approaches to building ontologies within academic communities.

These approaches are not intended to supersede collaborative ontologies, but instead to help reduce the friction between collaborative and embedded ontologies, and therefore between those building software using linked data and those building models in academia.

Separate embedded & collaborative ontologies

A good first step is to get a distinct understanding of what constitutes the embedded ontology in use, as compared with the collaborative ontology. This could be done in a number of ways:

- No collaborative ontology until necessary: For me, this is the most important. I believe the preemptive publishing of collaborative ontologies can be counter-productive; if an ontology can remain private and embedded until the software has been proven to work, then it will be a higher-quality representation of the domain. This adheres to the software principle that the best design is the design that emerges in response to iterative requirements. From my software perspective, a published and shared ontology is like an Open API: whilst the benefits of sharing can be huge, you have potentially also lost one of the most important capabilities in building software, the ability to change frequently and easily.

- Modularisation: I would suggest this as a key second step. Breaking ontologies down into smaller parts, particularly in response to growth. This will reduce the rate at which these modules change individually when compared with the whole. This technique can avoid the necessity for mappings (see below).

- Mapped: Where a clear mapping between the ontologies is expressed (perhaps using semantic equivalence). This comes with the overhead of maintaining two ontologies and a mapping.
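The mapped option in the list above can be sketched in a few lines. This is only an illustration under assumptions: both namespaces, every term name and the helper functions are hypothetical, and the dictionary stands in for equivalence statements that, in RDF, might be expressed with something like owl:equivalentClass or owl:equivalentProperty.

```python
# Hypothetical namespaces for the private and the shared ontology.
EMBEDDED = "http://example.org/app/"
SHARED = "http://example.org/sport-ontology/"

# Every term the embedded ontology currently defines (invented for this sketch).
EMBEDDED_TERMS = {
    EMBEDDED + "Team",
    EMBEDDED + "competesIn",
    EMBEDDED + "kitColour",
}

# Declared equivalences between embedded and collaborative terms.
EQUIVALENT = {
    EMBEDDED + "Team": SHARED + "SportsTeam",
    EMBEDDED + "competesIn": SHARED + "memberOfCompetition",
}


def to_shared(triple):
    """Rewrite one triple into shared terms, leaving unmapped values untouched."""
    return tuple(EQUIVALENT.get(term, term) for term in triple)


def unmapped_terms():
    """Embedded terms with no collaborative equivalent yet."""
    return sorted(EMBEDDED_TERMS - set(EQUIVALENT))


team_a = "http://example.org/data/team/a"
division = "http://example.org/data/division/1"
print(to_shared((team_a, EMBEDDED + "competesIn", division)))
print(unmapped_terms())
```

The list of unmapped terms is a small example of the maintenance overhead, but it is also a ready-made agenda for the dialogue between the two ontologies.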

Modularised ontologies

One issue with ontologies is the common practice of attempting to model a single ‘domain’ with a single ontology. A selling point for this is the ability to “work within a single namespace”. I think that this practice is counter-productive, particularly with regard to aligning collaborative and embedded ontologies.

If a modularised approach is taken, a number of options become available. If an ontology can be broken into a number of parts, with references between them, the parts can be assigned metadata to indicate the following:

- Stability: For example, it should be possible to add a new ‘ontology module’ which is entirely experimental. In sport, this could be a ‘sponsorship’ module which is up for discussion in the community, but forms no part of the working software.

- Module version: By separately versioning ontology modules, stability can be achieved within some modules, whilst others regularly change.

- Equivalence/alternatives: Alternative or equivalent modules could exist if different perspectives on the same domain exist.
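As a rough sketch of the module metadata listed above, the following shows one possible shape for it. The field names, the "stable" and "experimental" labels, the module names and the version numbers are all assumptions made for illustration; I am not describing an existing meta-ontology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OntologyModule:
    """Metadata for one ontology module (hypothetical fields)."""
    name: str
    version: str
    stability: str  # assumed labels: "stable" or "experimental"
    alternatives: tuple = ()  # names of equivalent or alternative modules


MODULES = [
    OntologyModule("teams", "2.1.0", "stable"),
    OntologyModule("fixtures", "0.3.0", "stable", alternatives=("schedules",)),
    # Up for discussion in the community, but no part of the working software.
    OntologyModule("sponsorship", "0.1.0", "experimental"),
]


def usable_in_software(modules):
    """Names of the modules the working system is allowed to depend on."""
    return [m.name for m in modules if m.stability == "stable"]


print(usable_in_software(MODULES))
```

With metadata like this, an experimental module can sit alongside stable ones without the software ever loading it.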

Dependency management between modules

Whilst I am aware that work has been done in this area, it has not matured to the level seen with library dependency management in software (e.g. Maven or Ivy). It is straightforward to build dependency graphs between ontologies, but the more subtle version-specific dependency management is not readily available. More efficient tooling, and a consensus on meta-ontologies in this area, would lower the bar for fine-grained modularisation of ontologies.
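To show the kind of version-specific check I have in mind, here is a small sketch. The compatibility rule (same major version, and at least the required version) is an assumption borrowed from common software versioning practice, and the module names, version numbers and function names are invented for the example.

```python
def parse(version):
    """Turn '2.1.0' into (2, 1, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def compatible(available, required):
    """Assumed rule: same major version, and available is at least required."""
    have, need = parse(available), parse(required)
    return have[0] == need[0] and have >= need


# Versions of the modules currently published (hypothetical).
AVAILABLE = {"teams": "2.1.0", "venues": "1.0.0"}

# Minimum versions each module says it depends on (hypothetical).
REQUIRES = {
    "fixtures": {"teams": "2.0.0", "venues": "1.2.0"},
    "teams": {},
}


def unsatisfied(requires, available):
    """List (module, dependency, required, available) for every broken dependency."""
    problems = []
    for module, deps in sorted(requires.items()):
        for dep, needed in sorted(deps.items()):
            have = available.get(dep)
            if have is None or not compatible(have, needed):
                problems.append((module, dep, needed, have))
    return problems


print(unsatisfied(REQUIRES, AVAILABLE))
```

A plain dependency graph would say that fixtures depends on venues and stop there. The version check is what reveals that the published venues module is too old.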

Finally

It should now be clear that embedded ontologies are a by-product of software delivery. This, in my view, is exactly how it should work (as long as the embedded ontologies are curated and crafted as the software grows, using the same principles applied to collaborative ontology design). Using this approach, I would suggest that a higher-quality, more robust ontology may emerge. It will be an ontology that has been road-tested by the necessity to deliver software that serves a particular audience. Perhaps this particular audience will skew the perspective of the ontology, but any model is, after all, just a perspective.

Once this ontology has undergone this growth, and subsequent road-testing, the dialogue between a fit-for-purpose embedded ontology and a collaborative ontology can get really interesting. Both will have much to offer, and I feel the benefits will flow in both directions.

I would be keen to hear if any of this reflects your own experiences, and, in particular, to get the perspective of individuals working exclusively on collaborative ontologies.